Tuesday, December 06, 2005

Answer to question posed at VSLive

The thing I was most nervous about at my VSLive presentation was question time. I had an ingenious plan to avoid it: I had timed my presentation, and it ran for just over an hour. But in the words of a famous poet, "The best laid plans of mice, men (and geeks) gang aft agley." People in the audience stopped me midway through to ask questions. I was able to answer most of them, but for one I said "I think the answer is yes, but I'll get back to you". The question was "Does SQL Express support bulk copy?", and after some testing the answer is "Yes". As mentioned in my presentation (slides available here), SQL Express does not support SSIS (the replacement for DTS); however, in my testing the bcp utility works fine. The following script can be used to verify that both export and import work.

use tempdb

-- Create a test table
create table BulkTest
(TestKey INT NOT NULL,
TestData NVARCHAR(50) NULL,
CONSTRAINT BulkTest_PK_Constraint PRIMARY KEY (TestKey ASC)
)

-- Add some test data
INSERT INTO BulkTest (TestKey, TestData) VALUES (1, N'This is just some test data')
INSERT INTO BulkTest (TestKey, TestData) VALUES (2, N'This is some more test data')
INSERT INTO BulkTest (TestKey, TestData) VALUES (3, N'It is important to have test data')

-- Run the rest one step at a time: the bcp lines are run from a command prompt,
-- replacing ServerName with the name of your machine.

-- Perform a bulk export using the bcp command-line utility
-- bcp tempdb.dbo.BulkTest out BulkTest.dat -T -c -S ServerName\SQLExpress

delete from BulkTest

-- Perform a bulk import using the bcp command-line utility
-- bcp tempdb.dbo.BulkTest in BulkTest.dat -T -c -S ServerName\SQLExpress

select * from BulkTest
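If you would rather stay inside T-SQL, a server-side alternative to the bcp import step in the test script is the BULK INSERT statement. This is a sketch rather than something tested for the original post, and the file path is hypothetical. Note that BULK INSERT reads the file from the SQL Server service's point of view, whereas bcp reads it relative to the client machine. The options assume the file was produced by `bcp ... out ... -c`, which writes character data with a tab between fields and CR/LF at the end of each row.

```sql
USE tempdb
GO
-- Clear the table so the import can be checked against the original 3 rows.
DELETE FROM BulkTest

-- Hypothetical path: it must be readable by the SQL Server service account.
BULK INSERT dbo.BulkTest
FROM 'C:\Temp\BulkTest.dat'
WITH (
    DATAFILETYPE = 'char',     -- character format, matching bcp -c
    FIELDTERMINATOR = '\t',    -- bcp -c default field terminator
    ROWTERMINATOR = '\n'       -- BULK INSERT treats '\n' as CR/LF
)

SELECT * FROM BulkTest
GO
```

BULK INSERT also requires the ADMINISTER BULK OPERATIONS permission, so on a locked-down machine bcp may still be the simpler option.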

Saturday, December 03, 2005

VSLive Seminar post mortem

Well, my VSLive presentation was on Wednesday, and I was personally quite pleased with how it all went. I must confess I was a little nervous, but I didn't let my nerves get the better of me. I had really positive feedback from people after the seminar, and even one request for the source code for my Photo Album Demo... enjoy! The demo loses a bit by being distributed in this form, as it was more about the process of building it and showing off the Visual Studio integration features of SQL Server Express. There are only 5 lines of user-written code in the project, all to do with implementing drag-and-drop on the picture box so that you can add photos to the database; the rest is all IDE-generated.

There was one point that, given the discussions after the seminar, I feel I should clarify. I went into a bit of detail describing the SQL Express-only feature "User Instances", or RANU (Run As a Normal User). I do think it is an interesting feature, and I can see some real potential for it. However, from the discussions after the seminar, it appears I didn't stress enough that User Instances are optional: you opt in with "User Instance = true" in the connection string. You don't need to use them. In fact, I would suggest that in the majority of usage scenarios you will use SQL Server Express in a traditional database server setup: a dedicated server machine that a DBA logs on to in order to perform maintenance tasks such as attaching databases, with multiple clients connecting from their own machines with only the privileges they need for their tasks. User Instances are only really required when you want to deploy an application in a single-user scenario, ship the database file along with the executables, and have the user (who, by security best practice, should NOT be an administrator on their own machine) attach to the database dynamically. I hope this clears things up.
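To make the opt-in nature concrete: the only thing that puts a client onto a user instance is the connection string. Without "User Instance=True", the client connects to the normal SQL Express instance like any other server. The sketch below shows both kinds of connection string as comments (the file and database names are hypothetical), then two queries you could run on the parent SQL Express instance to check whether user instances are enabled and which ones are currently running. `sys.dm_os_child_instances` is specific to SQL Express, so treat this as a sketch rather than a definitive checklist.

```sql
-- Client side (ADO.NET connection strings), shown as comments.
-- MyApp.mdf and MyAppDb are hypothetical names.
-- Opting in to a user instance:
--   Data Source=.\SQLEXPRESS;AttachDbFilename=|DataDirectory|\MyApp.mdf;Integrated Security=True;User Instance=True
-- Traditional server usage, no user instance:
--   Data Source=.\SQLEXPRESS;Initial Catalog=MyAppDb;Integrated Security=True

-- Run on the parent SQL Express instance.
-- value_in_use = 1 means user instances may be created, 0 means disabled.
SELECT name, value_in_use
FROM sys.configurations
WHERE name = N'user instances enabled';

-- One row per user instance the parent instance has started.
SELECT owning_principal_name, instance_pipe_name, heart_beat
FROM sys.dm_os_child_instances;
```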

For a really good, in-depth article on User Instances, see Roger Wolter's MSDN article on SQL Express User Instances.

By the way, if any of the people at the seminar find my blog, please feel free to leave feedback on my performance; I would like to know how to improve my presenting.

Monday, November 07, 2005

VS2005 SQL Server 2005 RC/RTM Gotcha with MARS

For those who are familiar with MARS (Multiple Active Result Sets) and have been excitedly using it with versions of Visual Studio 2005 prior to the June CTP, you may be interested to know that the default behaviour has changed.

MARS is now OFF by default, and any code written to use it, as in this CodeProject article, will throw an exception unless you explicitly add "MultipleActiveResultSets=true" to your connection string.

The exception you will receive is "There is already an open DataReader associated with this Connection which must be closed first".
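The fix itself is on the client side: add MultipleActiveResultSets=True to the connection string, shown as a comment below with hypothetical server and database names. If you want to confirm from the server side that a connection really is using MARS, this sketch queries `sys.dm_exec_connections`, where each MARS logical session shows up with `net_transport = 'Session'` and a `parent_connection_id` pointing at the physical connection it shares. The query needs the VIEW SERVER STATE permission.

```sql
-- Client side (ADO.NET); MyAppDb is a hypothetical database name:
--   Data Source=.\SQLEXPRESS;Initial Catalog=MyAppDb;Integrated Security=True;MultipleActiveResultSets=True

-- Server side: list MARS logical sessions and the physical connection they share.
SELECT c.session_id,
       c.connection_id,
       c.parent_connection_id,  -- physical connection this MARS session uses
       c.net_transport          -- 'Session' for MARS logical sessions
FROM sys.dm_exec_connections AS c
WHERE c.net_transport = N'Session';
```

If the application opens a second reader without MARS enabled, you get the DataReader exception instead, and no 'Session' rows appear for that client.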

Another resource of interest is "Getting the MARS Sample working in the June CTP".

Saturday, November 05, 2005

Blogging on Blogging

I don't usually blog about blogging; I find it a little too self-referential for my liking. But last weekend I was talking to a friend about blogs, and he told me he didn't particularly like the idea. His explanation of why he didn't see blogs as a valuable social construct went along these lines: at one end of the spectrum you have journal articles, which have rigorous peer review but are extremely narrow in scope; blogs sit at the opposite end, extremely wide in scope but with absolutely no peer review.

This got me thinking, and caused me to re-read the article that got me into blogging in the first place. I find it strange that whenever I mention Joi Ito or his paper "Emergent Democracy", even to active bloggers, I get blank looks. I think it's a must-read for any serious blogger. In the paper, Joi Ito argues for the emergent nature of blogging and related social technologies, where "emergent" is taken to mean the "self organising ability of complex systems". In refutation of my friend's objection to blogs, I'd like to quote directly from the paper.

"Noise in the system is suppressed, and signal amplified. Peers read the operational chatter at Mayfield's creative network layer. At the social network layer, bloggers scan the weblogs of their 150 acquaintances and pass the information they deem significant up to the political networks. The political networks have a variety of local maxima which represent yet another layer. Because of the six degrees phenomenon, it requires very few links before a globally significant item has made it to the top of the power curve. This allows a great deal of specialization and diversity to exist at the creative layer without causing disruptive noise at the political layer."

I concede that any individual blog article by any individual blogger has not, in and of itself, been peer reviewed, and this is the way it should be. However, the blogosphere by its very nature will self-organise in a way that gives the effect of peer review and critique. I don't think we have reached utopia yet (my entry in this year's "understatement of the year" competition), and I believe there is still a way to go, with new technologies required and different online social structures to be explored, but when I originally read "Emergent Democracy" I was captured by the vision.

Friday, October 07, 2005

Speaking at VS Live

Well, this one came out of the blue. I have been asked to speak about SQL Server Express at the Sydney VS Live conference.

My colleague Greg Low dobbed me in for this one; he assumed that because I've been working on a commercial project with SQL Server Express since January this year, I might actually know something about it.

It's all very exciting, but I'm a bit nervous; I haven't done any public speaking for quite some time. I have a few ideas about what I want to talk about, but I'd love some feedback on what other people want to hear about SQL Server Express.

DB Performance tip

Normally when I think of DB performance, I concentrate on indexes, query structure, etc., all of which are quite important. However, I have started listening to an MSDN webcast series called "A Primer to Proper SQL Server Development" by Kimberly Tripp, and in the very first episode I found the answer to a performance issue that had been puzzling me and the developers I'm currently working with for some months.

The problem was that our application, which uses SQL Express, was having some serious performance issues. It was slow to start up and took ages to save to the database the first few times; after that things picked up a little, but not as much as would be ideal. Anyone want to guess what the problem was? I know all you SQL Server gurus out there have it already, but for the benefit of those who don't... pre-allocating the size of the log file fixed the problem quite nicely.

We were already pre-allocating our primary data file at 4MB, but when we used the CREATE DATABASE ... FOR ATTACH statement, the log file was created at a small default size of only about 500KB. The application is very database-intensive and filled up the log file very quickly, and by default the log file would autogrow by 10% of its current size, which would fill up very quickly again, then autogrow by another 10%, and so on. Of course, growing the log file is quite costly, and we found that by setting the log file to 4MB initially, with a 4MB file growth, we achieved quite a significant improvement. We cut the initial load time in half, and the first few saves are now instantaneous.
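A minimal sketch of the fix, assuming the database has already been attached under the hypothetical name MyAppDb and that its log file's logical name is MyAppDb_log (run sp_helpfile in the database, or check the first query's output, to find the real logical name). ALTER DATABASE ... MODIFY FILE changes one file property per statement, so the size and the fixed 4MB growth increment are set separately. SIZE must be larger than the file's current size.

```sql
USE MyAppDb   -- hypothetical database name
GO
-- Current file settings: size is in 8KB pages; growth is in 8KB pages
-- unless is_percent_growth = 1, in which case it is a percentage.
SELECT name, type_desc, size * 8 AS size_kb, growth, is_percent_growth
FROM sys.database_files;
GO
-- Pre-size the log to 4MB (MyAppDb_log is a hypothetical logical name).
ALTER DATABASE MyAppDb
MODIFY FILE (NAME = MyAppDb_log, SIZE = 4MB);
GO
-- Replace the default 10% growth with a fixed 4MB increment.
ALTER DATABASE MyAppDb
MODIFY FILE (NAME = MyAppDb_log, FILEGROWTH = 4MB);
GO
-- Re-check: growth should now be 512 pages (4MB) with is_percent_growth = 0.
SELECT name, type_desc, size * 8 AS size_kb, growth, is_percent_growth
FROM sys.database_files
WHERE type_desc = N'LOG';
GO
```

A fixed increment means each growth event adds a predictable amount of space, instead of a run of small 10% steps while the log is still tiny.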

If you're working with SQL Server, I'd highly recommend the webcast series; there are lots of other useful tips and hints.

Tuesday, September 13, 2005

Pointless rant against the full sale of Telstra

As Telstra's last few remaining shares are sold off by a party that really should be there to protect rural Australia from the whims of narrow-minded economic rationalism, I'd like to take this opportunity (not so much as a eulogy, however apt the metaphor, more as an exercise in futility) to express a few of my (increasingly pointless) objections.

The free market, according to the economists, is meant to maximize profit. Ignoring the fact that there are no guarantees about how that profit will be distributed, there are a number of axioms that underpin this assumption. One of those axioms is that there are no externalities, or at least that any externalities are compensated. This is quite a big assumption even for some of the simplest economic transactions, but when it comes to communications, this axiom doesn't even begin to stand up.

There are numerous externalities, most of which are so intangible they can't even begin to be compensated. The one that most concerns me is that communications is such a valuable resource, not only for building communities but also for business in general. The government's 2 billion dollar trust fund is meant to get current services to the bush; however, as a technology professional, I know how quickly communications technologies change. Telstra are struggling even to get adequate mobile coverage to rural areas, let alone other technologies such as broadband internet. The communications technologies of tomorrow are what will drive business, and without the power to regulate the egalitarian dispersion of these technologies, certain sectors of the community (those where a privatized company can't see immediate profits to pass on to its shareholders) will miss out. This, I believe, has the potential to seriously impact Australia as a whole.

Monday, August 29, 2005

ADSL - the saga continues

Well, after my previous hassles (here and here), I was at my wits’ end. I had eliminated most variables before I’d even rung my ISP, but after they insisted I have my modem double-checked by plugging it into a friend’s ADSL line and watching it work, the only remaining variable was the alarm system. When we moved in, there were no instructions for the alarm system, and to be honest it wasn’t high on my priority list, but the tech support person at my ISP insisted that I get it checked out because it might still be plugged in to the phone line and causing interference. Well, I checked the alarm myself and couldn’t find any connection to the phone line, but then again, I’m not a trained alarm system technician, so what would I know? And as the tech support person at my ISP kindly informed me, "If Telstra send a technician out and they find out that it’s your fault, they’ll charge you $99". So I had an alarm technician come around and verify ($75 later) what I already knew: the alarm was not plugged in to the phone line. So finally the coast was clear and I could insist on them sending a Telstra technician out… still with the warning that I’d be charged if Telstra concluded the issue had anything to do with my setup. Like all service companies these days, Telstra would only give a 4-hour window during which they would perform the work, which meant I had to stay home from work. Fortunately the technician called me fairly early in that window to tell me “yeah mate, it should be right now, there was a problem at the exchange”. And sure enough, I now have ADSL internet access again.

Having ascertained that it was Telstra’s fault, and having been threatened with a charge if it was mine, I am wondering whether I should send Telstra a bill for my time and the expenses I’ve incurred determining that Telstra didn’t do the job properly in the first place. Another thing that has crossed my mind is whether I would have got the same run-around had I been a BigPond customer, i.e. is this Telstra’s way of using its monopoly on the network to make it difficult for other ISPs to compete on an even footing? Maybe that’s a bit too much of a conspiracy theory, and Occam’s razor suggests that a mixture of incompetence and/or apathy may be a better explanation, which essentially reduces to Hanlon’s razor: "Never attribute to malice that which is adequately explained by stupidity".

Friday, August 26, 2005

Quality on the web

I recently was asked to review some changes to a corporate website, and it got me thinking about what I expect for web sites from a quality perspective.

One thing that I think should go without saying (but obviously doesn’t) is validation. I would say at least the 2 biggies, XHTML and CSS validation, are a must. These validators check your XHTML or CSS code against the generally accepted standard. Now, if you’re designing a site that you know is ONLY going to be viewed with Internet Explorer, or whatever browser happens to be the flavour of the month for your target audience, then fine, code to that browser if you really must. However, if your website is public-facing you have no such control, and with mobile devices becoming more and more popular, you can never guarantee what your website will be viewed with. I challenge anyone reading this blog to validate their corporate website; you’ll probably be very surprised by what you find. I know I was surprised just doing a minimal amount of research for this article.

I actually suggested in the review that anyone doing web development should have every browser known to mankind (and a few others) installed on their machine, NOT JUST INTERNET EXPLORER. I am always frustrated when I’m using Firefox, come across something that doesn’t look quite right, and have to exit and view it in IE.

Another big thing for me is that tables should NEVER be used for formatting. Tables are great... for tabular data. Nothing else in XHTML renders tabular data more elegantly or describes it more precisely than tables. But when it comes to formatting, they suck. I know that in the dark ages of web development there was little other choice, and I myself used them prolifically before I stumbled upon the CSS Zen Garden. This is one of my favourite sites ever, and it gave me the impetus I needed to sit down and learn CSS properly. I can also recommend the book "HTML Utopia: Designing Without Tables Using CSS". The other advantage, as the CSS Zen Garden shows, is that if you write your pages properly, then when marketing come down and say "we want to change our corporate look", as marketing are wont to do (they have to justify their existence during the quiet periods somehow), it is a matter of replacing a few CSS files and putting up the new corporate logo, and suddenly the entire website looks completely different. You can then spend the rest of the month that someone who had formatted their website with tables would need on something far more productive, like getting your site up to accessibility standards such as Section 508 or WCAG 1.0.

Sunday, August 21, 2005

More hassles

I can't believe what has happened over the past few days.

After my previous hassles, I patiently waited until Friday. According to my ISP's website, they would notify me on a designated phone number I provided when I filled out the online form requesting my change of phone line. I guess I was expecting an actual human (of some description) to call and pleasantly inform me that I was once again connected to the information superhighway, but why pay someone to talk when a simple automated SMS will do? OK, I can handle the SMS, but what I find completely unacceptable is that, after my previous argument with them over their not divulging information to me because I wasn't the person who set up the phone line with Telstra, they had the audacity to send an SMS with my user name and...... PASSWORD in plain text. I am now seriously concerned that they have no real idea about security.

To add further to my problems, I hadn't actually unpacked my ADSL modem from the move, and of course we've got boxes everywhere, so I spent most of Saturday trying to find it, and when I finally got it unpacked..... I couldn't get things working. I've been pretty busy this weekend looking at furniture for the new place, so I haven't had a great deal of time, and I decided to wait until I came home tonight to go through all the different possibilities and test all my cabling etc. before calling my ISP and spending 30 mins in the phone queue to maybe find an answer. Well, the good news is I didn't spend 30 mins in the queue; the bad news is that the reason was that the call centre closed at 6pm. So until tomorrow after work, I'm still on dial-up.

This is getting beyond a joke.

Thursday, August 18, 2005

Whinge about ADSL hassles

I haven’t blogged in a while, and there is a damn good reason why. I have been in the middle of moving house. My partner and I have recently purchased a fantastic place in Fitzroy (inner city Melbourne).

The moving process is quite stressful, but I must confess I wasn’t expecting so much hassle with my ADSL line. We needed a new phone line at our new place, so of course we had to switch our ADSL plan to the new line. We made this request on Monday the 8th of August, and waited… I was under the impression that this would take up to a week, which I think is a particularly ridiculous amount of time considering there isn’t really all that much work involved. They have to test the line and then re-route my connection to my ISP. That sounds like about half an hour’s work to me, and given that I had paid $99 for the work to be done, I would think Telstra could afford to resource this role fairly well… We are still waiting for it to happen. When I called my ISP, the person I spoke to gave me the standard response: “Telstra say that it will take between 5 and 10 working days”. This is completely ridiculous: I’m an IT professional, and because I moved I can’t have an ADSL connection for 2 weeks. I do have dial-up access, but that’s not a real internet connection… Ever tried downloading webcasts and podcasts over dial-up? It’s plain infuriating. To make matters worse, they wouldn’t give me any specific details on the status of my request because my previous phone line was registered under my partner’s name. This, I was told, was for security reasons… Let’s see now: the guy at my ISP’s help desk had already asked me for my address, the account name and my phone number. He then proceeded to ask me if I was Androniki Papapetrou, my partner (obviously not realising that Androniki is a Greek girl’s name, the male equivalent being Andronicus). If at this point I’d said “Yes, that’s me”, he would have divulged the information I required, but stupid honest me said “No, that’s my partner”. So after he insisted that he couldn’t tell me for security reasons, I got my partner to ring.

I had to tell her our account name, as she didn’t know it, and when she rang, the questions they asked to verify that she was who she said she was were… account name, address and telephone number. Hmmm, top-notch security there, guys; if this information were genuinely sensitive, we’d be in a lot of trouble. I think this incident raises a lot of questions about security. I am a firm believer in privacy, but you really need to identify which pieces of information should be secure and then secure them appropriately; the rest may as well be published on your website… It’ll save people so much frustration.

The latest on our ADSL connection is that it should be ready by Friday….. thanks Telstra.

Sunday, July 17, 2005

Evaluating VSTS

Well, today I finally start evaluating Visual Studio Team System. Yesterday I upgraded my PC to 2 Gig of RAM, as the Virtual PC image with VSTS beta 2 requires 1.5 Gig on its own.

I am excited about the new version control system (SCC), mainly because, having worked with Visual SourceSafe on previous projects, I am all too aware of the need for a decent source control offering from Microsoft. I am going to be quite hard to please, though, given that I have been using Subversion for my own projects for some time now and have found it to be very good.

I am also interested in the unit testing and code coverage modules.

I have to confess, though, that I don't think the productivity gains are going to be quite as dramatic as the Microsoft hype is making out. The best we can hope for is that, with the tighter integration of all these aspects of the SDLC, there will be a small time saving from people not having to switch between applications to perform development-related tasks, and that reporting on project status will become easier.

There are a few reasons I believe this.

- A good, productive software development team will already have processes in place that suit their projects, their tool set and their team structure. They will already have worked out procedures around the fact that their tools aren't completely integrated, so the integration of the tools can only save a small amount of time here.

- A good team will also be disciplined in following procedure. If a code review or static code analysis is required before check-in, a disciplined team will adhere to this, and doesn't really need software to tell them that they've forgotten to write unit tests. In this case, the only benefit I can see is that when new staff are introduced to the development cycle, the procedures will be more evident and they may learn them more quickly.

- A good development team will have good leadership that keeps an eye on the processes, tweaking them if there are issues with the code produced or if the procedures are becoming too burdensome.

- A bad development team will have discipline problems that require more than just some software rules to solve. In my experience, where there is a lack of discipline, it stems either from laziness or from a lack of understanding of the reasons behind the procedures and policies. Laziness is a serious problem; a lack of understanding, however, can only be solved through education and experience.

- A good team will have communication channels in place. One presentation I've seen suggests that with VSTS there will be no more need for weekly status meetings, because the work item tracking and the automatic reporting will be enough to show status. Maybe the actual status reporting in such meetings could be simplified, but I have always found these kinds of meetings vital, as they provide a forum for everybody (not just the project manager) to get a feel for what is going on across the entire project. Developer X might be complaining about a problem he's having, and developer Y says that he has just finished some code that solves a similar problem and would just require some refactoring to solve X's particular issue, while tester A is struggling with a particular concept in the use cases, so one of the BAs offers to explain it a bit more thoroughly for the entire team, who then suggest she reword the use case slightly. These kinds of informal meetings are invaluable to a dev team, and until we start writing software with advanced AI, they cannot be replaced by software.

Anyway, I still think VSTS will be a worthwhile tool, but we need to be realistic about how far software tools can take us, and we must not underestimate the human side of software development.

Sunday, July 10, 2005

The code wars have begun

Fellow colleagues Mitch Denny and Joseph Cooney have started the "Iron Coder" challenge over the question of whether it is better to subclass or to use extender providers. It will be a very interesting battle, with dark lord Darth Denny defending extender providers, and the young Jedi Joseph upholding the cause for subclassing.

Use the source young Jedi.

Friday, July 08, 2005

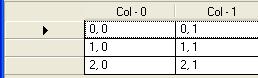

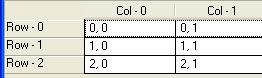

Great use for nested classes

When I first read about nested classes, I thought they were just one of those obscure parts of C# that I was never likely to find a decent use for, but today I found quite a good use for them, and it was sitting right there in my previous post.

Usually we use a custom control to contain an UltraGrid, like so:

public class MyControl : UserControl

{

...

private UltraGrid myGrid;

}

In my last post I offered a solution for adding row headers to an UltraGrid, but I passed the row names in as a List parameter to the constructor. In the problem I'm trying to solve, this list is already maintained by the user control, so that approach means maintaining it in 2 separate places..... Well, not any more. A nested class can access the private members of the class that contains it, so the draw filter can live inside the control and read the control's list directly:

public class MyControl : UserControl

{

...

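private List<string> myRowNames;

private UltraGrid myGrid;

// Nested and private, so nothing outside MyControl can see this class

private class RowSelectorDrawFilter : IUIElementDrawFilter

{

// Must not be private: the enclosing class cannot call a nested class's private constructor

public RowSelectorDrawFilter(MyControl control)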

{

myControl = control;

}

...

private MyControl myControl;

}

}

Then, at the business end of things in DrawElement, I use the following:

...

// myRowNames is private to MyControl, but the nested class is allowed to read it

drawParams.DrawString(drawParams.Element.Rect, myControl.myRowNames[row.ListIndex], false, false);

...

This means that I can let the user control maintain the row names, and no-one else ever needs to know about our RowSelectorDrawFilter class, as it's happily nested in MyControl.
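To show the idea without needing WinForms or the Infragistics assemblies, here is a minimal, framework-free sketch. RowNameOwner and HeaderLookup are hypothetical names standing in for MyControl and RowSelectorDrawFilter; the point is that the nested class keeps a reference to its owner and reads the owner's private list, so there is only one list to maintain.

```csharp
using System;
using System.Collections.Generic;

// Hypothetical, framework-free stand-in for MyControl: it owns the row names.
public class RowNameOwner
{
    // Private to the outer class, yet readable by the nested class below.
    private readonly List<string> myRowNames = new List<string>();
    private readonly HeaderLookup myLookup;

    public RowNameOwner()
    {
        // HeaderLookup's constructor is public so the outer class can call it;
        // the class itself is private, so callers never see it.
        myLookup = new HeaderLookup(this);
    }

    public void AddRow(string name) => myRowNames.Add(name);

    public string HeaderFor(int listIndex) => myLookup.GetHeader(listIndex);

    // Plays the role of RowSelectorDrawFilter: it holds a reference to its owner
    // rather than its own copy of the list.
    private class HeaderLookup
    {
        private readonly RowNameOwner myOwner;

        public HeaderLookup(RowNameOwner owner) { myOwner = owner; }

        // Legal because a nested type can access its enclosing type's private members.
        public string GetHeader(int listIndex) => myOwner.myRowNames[listIndex];
    }
}

public static class Program
{
    public static void Main()
    {
        var owner = new RowNameOwner();
        // Both rows are added after HeaderLookup was created; it still sees them
        // because it reads the owner's list directly.
        owner.AddRow("Opening balance");
        owner.AddRow("Closing balance");
        Console.WriteLine(owner.HeaderFor(1)); // Closing balance
    }
}
```

Because the lookup reads through the owner reference instead of keeping a copy, any change the control makes to its list is picked up automatically.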

Saturday, July 02, 2005

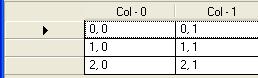

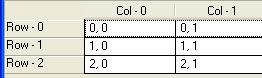

Row Headers in the Infragistics UltraGrid Control

A problem with the Infragistics UltraGrid control was puzzling me the other day: how do you get row headers into the grid?

My first thought was "well, the UltraGrid is such a huge control, there must be some property somewhere I can set to do this", something like.... myGrid.DisplayLayout.Rows[i].Header = "Row Name" or myGrid.DisplayLayout.Override.RowSelectors[i].Text = "Row Name" etc.... I spent ages looking, all to no avail.

After all else failed, I resorted (as a last-ditch attempt) to the Infragistics knowledge base, where I found this article.

That article only talks about getting rid of the pencil, but adapt it slightly and you get the following:

using System.Collections.Generic;

using Infragistics.Win;

using Infragistics.Win.UltraWinGrid;

class RowSelectorDrawFilter : IUIElementDrawFilter

{

public RowSelectorDrawFilter(List<string> rowNames)

{

myRowNames = rowNames;

}

public DrawPhase GetPhasesToFilter(ref UIElementDrawParams drawParams)

{

// For row selectors, ask for DrawElement to be called before the image is drawn

if (drawParams.Element is Infragistics.Win.UltraWinGrid.RowSelectorUIElement)

return DrawPhase.BeforeDrawImage;

else

return DrawPhase.None;

}

public bool DrawElement(DrawPhase drawPhase, ref UIElementDrawParams drawParams)

{

// If the image isn't drawn yet, and the UIElement is a RowSelector

if (drawPhase == DrawPhase.BeforeDrawImage && drawParams.Element is Infragistics.Win.UltraWinGrid.RowSelectorUIElement)

{

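// Get the row that this row selector belongs to

UltraGridRow row = (UltraGridRow)drawParams.Element.GetContext(typeof(UltraGridRow));

// Added defensive check: draw as normal if there is no row or no matching name

if (row == null || row.ListIndex < 0 || row.ListIndex >= myRowNames.Count)

return false;

// Index by ListIndex (position in the data source), not Index (display position)

drawParams.DrawString(drawParams.Element.Rect, myRowNames[row.ListIndex], false, false);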

return true;

}

// Else return false, to draw as normal

return false;

}

private List<string> myRowNames = null;

}

Then add the following line to the OnLoad method:

myTableGrid.DrawFilter = new RowSelectorDrawFilter(myRowNames);

Provided the row names list is in the same order as the underlying table, it all just works. It is important to use row.ListIndex rather than row.Index to index into the row names list: row.Index is the display index, so if the grid is sorted, the rows move but the row names list does not, and the headers end up against the wrong rows.
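The ListIndex versus Index distinction is easy to get wrong, so here is a small sketch that simulates it without the grid. FakeRow and RowHeaderDemo are hypothetical stand-ins, not Infragistics types: ListIndex is a row's position in the underlying list, and the display index is its position after the user sorts.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

// Hypothetical stand-in for a grid row (not an Infragistics type).
public sealed class FakeRow
{
    public int ListIndex { get; }   // position in the underlying data source
    public string Data { get; }
    public FakeRow(int listIndex, string data) { ListIndex = listIndex; Data = data; }
}

public static class RowHeaderDemo
{
    // Returns the header shown beside each displayed row, looked up either by
    // ListIndex (stays correct after sorting) or by display index (does not).
    public static List<string> Headers(IList<FakeRow> displayed, IList<string> rowNames, bool useListIndex)
    {
        var headers = new List<string>();
        for (int displayIndex = 0; displayIndex < displayed.Count; displayIndex++)
        {
            int i = useListIndex ? displayed[displayIndex].ListIndex : displayIndex;
            headers.Add(rowNames[i]);
        }
        return headers;
    }

    public static void Main()
    {
        var rowNames = new List<string> { "Jan", "Feb", "Mar" };
        var source = new List<FakeRow> { new FakeRow(0, "c"), new FakeRow(1, "a"), new FakeRow(2, "b") };

        // Simulate the user sorting the grid by the Data column.
        var sorted = source.OrderBy(r => r.Data).ToList();

        Console.WriteLine(string.Join(",", Headers(sorted, rowNames, true)));  // Feb,Mar,Jan
        Console.WriteLine(string.Join(",", Headers(sorted, rowNames, false))); // Jan,Feb,Mar
    }
}
```

After sorting, the row holding "a" is displayed first but is still item 1 in the source, so looking up by ListIndex gives it "Feb", while looking up by display position wrongly gives it "Jan".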

Hope this helps someone else out there.

Monday, June 27, 2005

Simple fix for nunit

The simple fix for the NUnit problem was to find someone with NUnit already installed and copy the binaries across onto my machine. Everything works fine.

PS: Oh, and load nunit.core.dll and nunit.framework.dll into the GAC using gacutil.exe.

Saturday, June 25, 2005

Beta 2 woes

Well, it's been another interesting week at the bleeding edge of technology.

I discovered a problem with one of the third-party controls we were using that caused events to fire in a different order than we were expecting. This issue only occurred on my laptop, and just to prove it wasn't hardware, I ran it on a VPC on my laptop, and it worked as expected. After much searching and gnashing of teeth, I decided that a reformat was in order. As discussed earlier, I have had some issues with the upgrade from beta 1 to beta 2 of Visual Studio 2005, and figured that it could well be a framework issue, especially since the third-party component used .NET 1.0 and we were developing for .NET 2.0 beta 2. I figured it would also give me the advantage of being the only one in the office with a completely clean install of Visual Studio 2005 beta 2.

So after half a day of re-installing my system, I am finally back to a place where I can develop. The biggest issue, however, is that I can't install NUnit, because the installer won't run unless it sees the .NET 2.0 Framework beta 1. As I have mentioned earlier, I'm a bit of a unit test fanatic, and without it I feel crippled. My first thought was to grab the source and compile it under VS 2005 beta 2. No such luck: bits of it compile, such as the core NUnit framework, but not the NUnit GUI itself.

Oh well, I guess I'll just keep limping along until the guys at NUnit release a new version.

Saturday, June 11, 2005

The beauty of unit tests

Small confession: I only started writing unit tests a couple of years ago, but since then I have become more and more obsessed with them. The development manager at the company I am currently contracted to has called me a “unit test evangelist”. Why have I become so fanatical in such a short period of time? I guess that, being interested in the whole development cycle, I can see the huge benefits of code that has been properly unit tested. I’d like to list what I see as some of the advantages, in no particular order, and certainly not exhaustively.

- Coverage: Whenever a piece of code is written, its underlying logic should be tested as soon as possible, and in isolation from the rest of the system.

- Confidence: If a class has been adequately unit tested, then anyone using that class can have a certain level of confidence in it.

- Demonstration: When the writer of a class writes unit tests against that class, another developer can use those tests to understand how the class is supposed to be used (and, in the case of negative unit tests, how it is not supposed to be used).

- Shorter System Test: As I’ve mentioned before, system test is the most unpredictable phase of the entire development cycle. If bugs are found by the developer in unit tests, they will never make it to system test.

- Refactoring: In today’s “agile” world, refactoring is how a lot of companies enhance their software, but it can lead to bugs if the existing system is not understood properly. If a project has adequate unit test coverage, then any false assumptions made by the developer doing the refactoring will be picked up immediately by the unit tests, forcing the developer to understand that bit of the system before continuing.

- Performance analysis: A good set of unit tests can be used as a starting point for performance profiling. I have actually heard of one company that had the tradition that every Friday, all developers would stop what they were doing and concentrate all their efforts on improving the performance of their five slowest unit tests. Even if you don’t do this, you can at least see where your performance problems may lie.

I hear a lot of developers complaining that it’s too much effort to set everything up to a point where their classes can be unit tested. It can be a bit of an effort in some cases, if, say, you’re working with data layer classes that talk to a database; however, it just requires a bit of thought when you start the project, and a lot of the setup work can potentially be re-used in the main application. I have yet to see any case where putting in the extra effort has not paid off. The only exception I can really think of is retrofitting unit tests to a piece of existing software, which can be more pain than it’s worth; however, this shouldn’t be an excuse not to start. My recommendation would be to decide that any new piece of functionality should be fully unit tested.

Over the course of the next few weeks, I’d like to write up a few articles about getting started with unit tests, focusing on a few of the different options available. If you have already been convinced by my most eloquent argument and want to get started right away, here are some links to see you on your way.

For .NET developers, there is NUnit.

For Java developers, there is JUnit.

For C++ developers, there is C++ Unit.

There is even TSQLUnit for unit testing your Transact-SQL.

Beta tools

Sometimes I wonder exactly what I have to offer to the development community. I am just a developer, the same as thousands of other developers out there, and rather than just add to the blog noise, if I don’t feel I have anything new to offer I’d rather not say anything. However, the one thing I am doing that not a lot of developers are doing at the moment is writing a commercial application using Whidbey (Visual Studio 2005) and Yukon (SQL Server 2005). Most developers I know can’t really find the time to do much more than download the betas and have a short play before they go back to Visual Studio 2003 and SQL Server 2000. So I’ll share some of my experiences using the beta tools, and I’ll start the ball rolling by talking about my recent experience upgrading from Visual Studio beta 1 to beta 2.

The team lead had done the hard yards initially, and was raving about how smoothly the upgrade went. As far as code goes, there were a few minor things that needed changing around dictionaries etc…, but nothing significant. So when it was my turn to upgrade, I thought the process would be relatively smooth. So I started uninstalling stuff… first our software, then Infragistics, then all the individual components of Visual Studio; then I ran the cleanup tool (see here for details on what's involved), and uninstalled all the components of SQL Server, as well as the SQL Server Express instance for our software etc…. So by early afternoon, I was ready to start installing things.

Installing VS2005 beta 2 was a very long process, so don’t expect to do this in 10 minutes. It’s good to see they have now included SQL Mobile in the default install. There was an error at the end installing SQL Express, but I knew I would have to install our own instance later anyway, so I pushed on regardless. I installed Infragistics, then went on to install our product, which should have installed the SQL instance. It didn’t, so after looking around in the registry we found that the uninstall of the SQL instance hadn’t cleaned up the registry. We deleted the entries manually and tried again; this time the SQL instance install ran, but came up with a fatal error: "Service 'SQL Server (instancename)' (MSSQL$instancename) could not be installed. Verify that you have sufficient privileges to install system services".

I knew I was an administrator on my own machine (what developer isn't?), so I hit the mailing lists and newsgroups. There were people quite willing to offer advice, especially on the SQL Down Under newsgroup, but when fellow Readify employee Greg Low suggested that re-formatting looked like the only option, I started to get a bit worried. However, with a bit of patience and some help from one of my work colleagues (actually the guy who writes the installer), we managed to figure out that the uninstaller for the SQL instance had not removed the service for the named instance of SQL Server we were using. So we hacked around in the registry a bit more, and finally we were up and running. In total, it took 2 days to upgrade from beta 1 to beta 2. Everything else seems to be going relatively well, and it is a true testament to Microsoft's commitment that we are able to develop commercial software on their beta tools, but I will make just 1 criticism..... please make error messages more informative. If the error had said "Cannot install service, an existing service with the same name already exists", I could have saved a day of pain.

Monday, April 25, 2005

Code Camp Oz

Just got back from Code Camp Oz. I fear my journey to the "geek" side is now fully complete.

Had a great time, met some more of my fellow Readify colleagues. It's always good to be able to put a face to the emails and blogs.

I attended most of the seminars (I couldn't quite bring myself to go to the VB.NET talks; I feel I've done enough VB6 and VBA in the past that if anybody really wanted me to do some VB.NET, I could quite easily wing it). There were 2 talks that had me very interested, both on one of my pet topics: performance. It's good to see that the developer community is taking performance seriously.

The other talks were also very good, and given the circumstances, I think all the speakers did a really good job. Murphy's law always holds true for demonstrations; worship of the demo gods sometimes helps, but when you're using beta software, sometimes even the demo gods give up and go home.

Thanks to all those who organised it, it was well worth giving up a weekend for.

Friday, March 11, 2005

My new laptop

Please forgive the self-indulgence, but I thought I'd just post a picture of my new laptop. It's not every day you get a new baby.

For those interested, it's an ASUS M6N (Pentium M 1.8 GHz, 1 GB RAM, 15.4" screen, 80 GB hard drive).

Sunday, March 06, 2005

The performance question

Recently there has been a lively discussion amongst my work colleagues at Readify about performance, sparked by a blog article by Rico Mariani. Some very interesting points have been made, and it has prompted me to think about performance. The random thought I want to share here (I am a little too insecure to post my extremely humble, and slightly off-topic, opinion to my colleagues at Readify) is that, despite all the promises of the computer industry backed up by Moore's Law, performance has been a big issue in every "serious" project I have been involved in.

At first I put it down to the fact that I was working with Palm OS and Pocket PC devices, and obviously on memory-constrained devices you have to be very careful. But in my current gig we're writing a PC application, and there are serious concerns about the performance of the system under regular use.

I think the flip side of Moore's Law is that the more powerful we build computers, the more ways we find of using that extra power, to the point that we are not really much further ahead than we were when computers were slower.

For example, in my previous job we developed a Pocket PC application which was essentially just a rewrite of a Palm OS application we had written 3 years prior. The PPC app requires a Pocket PC 2002 device with 32 MB of RAM and a 200 MHz processor, and still runs quite slowly, whereas the Palm OS application was targeted at a Palm OS 3.1 device with 2 MB of memory and an 8 MHz processor... and it ran quite fast.
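To put rough numbers on that comparison, using only the figures quoted above, the newer device had about 16 times the memory and 25 times the clock speed of the older one. Clock speed is only a crude proxy for throughput (it ignores differences in processor architecture), but the gap is large enough to make the point:

```latex
\frac{32\,\text{MB}}{2\,\text{MB}} = 16, \qquad \frac{200\,\text{MHz}}{8\,\text{MHz}} = 25
```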

Saturday, February 26, 2005

Unit testing private methods using reflection

I don't know how many other people have come across this problem, but a couple of days ago I was writing a class in C# and decided I wanted a private method to perform some functionality. I knew the private method would contain some non-trivial logic, but I didn't want to expose it to the classes other developers were working on. I decided that the logic within the method was serious enough to warrant some unit tests, but how could I unit test it, considering the method was private? Normally I would argue that as long as I tested the public interface correctly, I should be fine; however, I had a suspicion that this time was different. So I sought the advice of my fellow Readify colleagues, and the ever-brilliant Mitch Denny came to the rescue with some sample code that used .NET reflection to call into the private method.

using System;
using System.Reflection;

TestVictim victim = new TestVictim();
Type victimType = typeof(TestVictim);
MethodInfo method = victimType.GetMethod("PrivateMethodName",
    BindingFlags.NonPublic | BindingFlags.Instance);
// pass parameters in an object array
method.Invoke(victim, new object[] { /* . . . . */ });

Or, in my case, more along the lines of the following, as this was a static private method:

Type victimType = typeof(VictimClass);
MethodInfo method = victimType.GetMethod("PrivateMethodName",
    BindingFlags.NonPublic | BindingFlags.Static);
// pass parameters in an object array; the target is null because the method is static
method.Invoke(null, new object[] { /* . . . . */ });

This worked a treat, and I very quickly discovered why I'd suspected I should go the extra mile and create this unit test. The very first time I ran my unit tests, with just the most basic tests in place, I discovered one of those "doh" type bugs. Had I only tested the public interface, the considerable complexity in the public method that uses this private method would have had me looking in the wrong place for ages, stepping through each line of the public method trying to debug it. Because I'd spent 15 minutes setting up the unit test, when I did finally write the unit test for the public method, it worked first time.

Question: So when should you go to the extra effort of using reflection to unit test a private method?

Answer: When the private method contains non-trivial logic, and writing tests for the public interface won't make it obvious what's really happening or you won't be able to get decent code coverage from the public interface tests. There may be other times as well, but this is the rule I'm going to stick to for now.
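The GetMethod/Invoke calls above can be wrapped in a small helper so that each test stays readable. Below is a minimal sketch, assuming a hypothetical PriceCalculator class with one private instance method and one private static method; PriceCalculator, PrivateInvoker and their methods are illustrative names, not part of the original code. The helper also unwraps the TargetInvocationException that MethodInfo.Invoke throws when the target method itself throws, so a test sees the original exception.

```csharp
using System;
using System.Reflection;

// Hypothetical class under test. ApplyDiscount stands in for the
// "non-trivial" private logic described in the post.
public class PriceCalculator
{
    private readonly int discountPercent;

    public PriceCalculator(int discountPercent)
    {
        this.discountPercent = discountPercent;
    }

    // Public entry point that delegates to the private helpers.
    public int Total(int price)
    {
        return ApplyDiscount(price, Clamp(discountPercent));
    }

    // Private instance method: clamps the discount into the 0..100 range.
    private int Clamp(int percent)
    {
        return Math.Max(0, Math.Min(100, percent));
    }

    // Private static method: integer arithmetic, rounding towards zero.
    private static int ApplyDiscount(int price, int percent)
    {
        return price - (price * percent / 100);
    }
}

// Hypothetical helper wrapping the GetMethod/Invoke calls so that
// each test does not repeat the BindingFlags boilerplate.
public static class PrivateInvoker
{
    public static T InvokeInstance<T>(object target, string methodName, params object[] args)
    {
        return Invoke<T>(target.GetType(), target, methodName,
            BindingFlags.NonPublic | BindingFlags.Instance, args);
    }

    public static T InvokeStatic<T>(Type type, string methodName, params object[] args)
    {
        // Static methods have no target instance, so null is passed to Invoke.
        return Invoke<T>(type, null, methodName,
            BindingFlags.NonPublic | BindingFlags.Static, args);
    }

    private static T Invoke<T>(Type type, object target, string methodName,
        BindingFlags flags, object[] args)
    {
        MethodInfo method = type.GetMethod(methodName, flags);
        if (method == null)
        {
            // Fail loudly if the method was renamed, rather than with a NullReferenceException.
            throw new MissingMethodException(type.FullName, methodName);
        }
        try
        {
            return (T)method.Invoke(target, args);
        }
        catch (TargetInvocationException ex)
        {
            // Reflection wraps exceptions thrown by the target method; rethrow the original.
            throw ex.InnerException ?? ex;
        }
    }
}
```

In an NUnit test this would read something like `Assert.AreEqual(150, PrivateInvoker.InvokeStatic<int>(typeof(PriceCalculator), "ApplyDiscount", 200, 25));`. Because method names are passed as strings, renaming the private method breaks the test at run time rather than compile time, which is one more reason to reserve this technique for the cases described above.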

Wednesday, February 09, 2005

Peer Code Reviews - 2

In my previous article on peer code reviews, I mentioned I didn't like the idea of insisting on a code review before checking into SourceSafe. Well, I still don't like it, but I have discovered one reason that supports the idea.

If developers are left to code and check in/check out as much as they like, and are only required to get a code review before a periodic label, then it becomes tempting to write large chunks of code before getting a code review. Now, if a reviewer (by definition another developer) is asked to review pages and pages of code, then, as the reviewer is also undoubtedly a busy person, they will simply use the Page Down key to scan over vast amounts of code without really looking for anything in particular, because looking intently at each piece of code requires significant effort. I guess the conclusion is: review early, review often. However, I still believe that there should be very few barriers to checking in code that builds.

Wednesday, February 02, 2005

Peer Code Reviews

Even though I have 8 years' experience in the IT industry, it may or may not surprise you to know that until my current job I had never had a formal peer code review. In the past, the only times a peer looked at my code were in a very informal way, not really looking for the kinds of things that should be looked for in a proper peer code review. So, as you might guess, I was a little nervous when faced with the prospect of a peer code review; however, I am now completely sold on the idea.

I believe there are 2 elements that make code reviews invaluable to good code production. Firstly, the reviewer has a different perspective on the code. The reviewer is someone with different experiences and, not being in the thick of the coding, has time to relax and view the code more objectively than its creator. They are not bound to the same line of logic the coder went through to produce the code, so if the coder has written something a certain way after a string of incorrect or sub-optimal reasoning, the reviewer is free to question that logic or propose a better way of implementing an algorithm. The coder is then forced either to justify his logic by tracing through his steps, validating his decision at each point, or to accept the better way and re-code accordingly. The reviewer can also check for consistency with the accepted coding standards, and if the coding standards are good, better adherence will result in more readable, more maintainable code.

The second element is the psychological factor. As a developer, if I know my code is going to be reviewed, I will be very careful to adhere to the accepted coding standards, and I will make sure that my code is set out in a way that is easy for another person to read. I will also validate my logic, imagining how I will explain complex bits of code to my potential reviewer. And finally, I will give my code a once-over before I ask someone to review it, tidying everything up and making sure the review process will go smoothly, with no serious issues the reviewer could pull me up on.

All this doesn't guarantee bug-free code. In fact, the purpose of a code review is not to replace a proper QA process, but it creates an opportunity to catch obvious coding errors that would otherwise have come up in the QA process and extended that whole phase unnecessarily. As the QA cycle is always the largest unknown of any software project, anything that can potentially shorten this phase has to be a good thing.

The only thing I don't quite agree with is the way in which they use source control in conjunction with peer code reviews. Their idea is that there should not be a single line of code under source control that has not been reviewed. This creates an obstacle to checking in regularly. My personal opinion is that there should be very few obstacles to checking in modified source code. At my last job, the rules I instated were just the obvious two: only check in if the project is building (i.e. don't break the build), and check in regularly (usually before you go home at night). I always get nervous if source files are checked out for too long, as there are obvious problems that can occur. The issue is that, to avoid lots of small code reviews, developers will wait for multiple days until they have some serious code to review. I think that although the concept is noble, maybe a similar result could be achieved with some simple processes around labeling (or tagging, depending on the terminology of your source control) that can be administered by the release manager. I'll have to see how the process goes over the next few months, and whether my opinion of their source control methodology changes. One thing's for sure: I'm a convert to peer code reviews.